Origins and evolution of the Western diet: health implications for the 21st century.

There is growing awareness that the profound changes in the environment (eg, in diet and other lifestyle conditions) that began with the introduction of agriculture and animal husbandry 10000 y ago occurred too recently on an evolutionary time scale for the human genome to adjust. In conjunction with this discordance between our ancient, genetically determined biology and the nutritional, cultural, and activity patterns of contemporary Western populations, many of the so-called diseases of civilization have emerged. In particular, food staples and food-processing procedures introduced during the Neolithic and Industrial Periods have fundamentally altered 7 crucial nutritional characteristics of ancestral hominin diets: 1) glycemic load, 2) fatty acid composition, 3) macronutrient composition, 4) micronutrient density, 5) acid-base balance, 6) sodium-potassium ratio, and 7) fiber content. The evolutionary collision of our ancient genome with the nutritional qualities of recently introduced foods may underlie many of the chronic diseases of Western civilization.

EVOLUTIONARY DISCORDANCE

Evolution acting through natural selection represents an ongoing interaction between a species’ genome and its environment over the course of multiple generations. Genetic traits may be positively or negatively selected relative to their concordance or discordance with environmental selective pressures. When the environment remains relatively constant, stabilizing selection tends to maintain genetic traits that represent the optimal average for a population. When environmental conditions permanently change, evolutionary discordance arises between a species’ genome and its environment, and stabilizing selection is replaced by directional selection, moving the average population genome to a new set point. Initially, when permanent environmental changes occur in a population, individuals bearing the previous average status quo genome experience evolutionary discordance. In the affected genotype, this evolutionary discordance manifests itself phenotypically as disease, increased morbidity and mortality, and reduced reproductive success.

Like all species, contemporary humans are genetically adapted to the environment of their ancestors, that is, to the environment that their ancestors survived in and that consequently conditioned their genetic makeup. There is growing awareness that the profound environmental changes (eg, in diet and other lifestyle conditions) that began with the introduction of agriculture and animal husbandry 10000 y ago occurred too recently on an evolutionary time scale for the human genome to adapt. In conjunction with this discordance between our ancient, genetically determined biology and the nutritional, cultural, and activity patterns in contemporary Western populations, many of the so-called diseases of civilization have emerged.

CHRONIC DISEASE INCIDENCE

In the United States, chronic illnesses and health problems either wholly or partially attributable to diet represent by far the most serious threat to public health. Sixty-five percent of adults aged ≥20 y in the United States are either overweight or obese, and the estimated number of deaths ascribable to obesity is 280184 per year. More than 64 million Americans have one or more types of cardiovascular disease (CVD), which represents the leading cause of mortality (38.5% of all deaths) in the United States. Fifty million Americans are hypertensive, 11 million have type 2 diabetes, and 37 million adults maintain high-risk total cholesterol concentrations. In postmenopausal women aged ≥50 y, 7.2% have osteoporosis and 39.6% have osteopenia. Osteoporotic hip fractures are associated with a 20% excess mortality in the year after fracture. Cancer is the second leading cause of death (25% of all deaths) in the United States, and an estimated one-third of all cancer deaths are due to nutritional factors, including obesity.

NUTRITIONAL CHARACTERISTICS OF PRE- AND POSTAGRICULTURAL DIETS

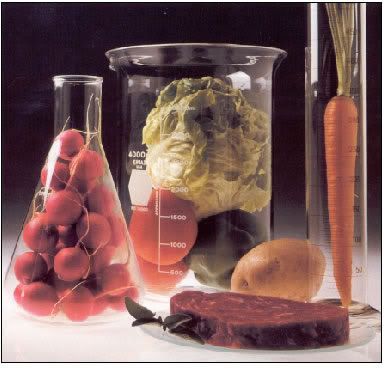

Before the development of agriculture and animal husbandry, hominin dietary choices would have been necessarily limited to minimally processed, wild plant and animal foods. With the initial domestication of plants and animals, the original nutrient characteristics of these formerly wild foods changed, subtly at first but more rapidly with advancing technology after the Industrial Revolution. Furthermore, with the advent of agriculture, novel foods were introduced as staples for which the hominin genome had little evolutionary experience. More importantly, food-processing procedures were developed, particularly following the Industrial Revolution, which allowed for quantitative and qualitative food and nutrient combinations that had not previously been encountered over the course of hominin evolution.

In contrasting pre- and postagricultural diets, it is important to consider not only the nutrient qualities and types of foods that likely would have been consumed by preagricultural hominins but also the types of foods and their nutrient qualities that could not have been regularly consumed before the development of agriculture, industrialization, and advanced technology. Food types that would have generally been unavailable to preagricultural hominins are listed in Table 1. Although dairy products, cereals, refined sugars, refined vegetable oils, and alcohol make up 72.1% of the total daily energy consumed by all people in the United States, these types of foods would have contributed little or none of the energy in the typical preagricultural hominin diet. Additionally, mixtures of foods listed in Table 1 make up the ubiquitous processed foods (eg, cookies, cake, bakery foods, breakfast cereals, bagels, rolls, muffins, crackers, chips, snack foods, pizza, soft drinks, candy, ice cream, condiments, and salad dressings) that dominate the typical US diet.

Dairy foods

Hominins, like all mammals, would have consumed the milk of their own species during the suckling period. However, after weaning, the consumption of milk and milk products of other mammals would have been nearly impossible before the domestication of livestock because of the inherent difficulties in capturing and milking wild mammals. Although sheep were domesticated by 11000 before present (BP) and goats and cows by 10000 BP, early direct chemical evidence for dairying dates to 6100 to 5500 BP from residues of dairy fats found on pottery in Britain. Taken together, these data indicate that dairy foods, on an evolutionary time scale, are relative newcomers to the hominin diet.

Cereals

Because wild cereal grains are usually small, difficult to harvest, and minimally digestible without processing (grinding) and cooking, the appearance of stone processing tools in the fossil record represents a reliable indication of when and where cultures systematically began to include cereal grains in their diet. Ground stone mortars, bowls, and cup holes first appeared in the Upper Paleolithic (from 40000 y ago to 12000 y ago), whereas the regular exploitation of cereal grains by any worldwide hunter-gatherer group arose with the emergence of the Natufian culture in the Levant 13000 BP.

Domestication of emmer and einkorn wheat by the descendants of the Natufians heralded the beginnings of early agriculture and occurred by 10000–11000 BP from strains of wild wheat localized to southeastern Turkey. During the ensuing Holocene (10000 y ago until the present), cereal grains were rarely consumed as year-round staples by most worldwide hunter-gatherers, except by certain groups living in arid and marginal environments. Hence, as was the case with dairy foods, before the Epi-Paleolithic (10000–11000 y ago) and Neolithic (10000 to 5500 y ago) periods, there was little or no previous evolutionary experience for cereal grain consumption throughout hominin evolution.

In the current US diet, 85.3% of the cereals consumed are highly processed refined grains. Preceding the Industrial Revolution, all cereals were ground with the use of stone milling tools, and unless the flour was sieved, it contained the entire contents of the cereal grain, including the germ, bran, and endosperm. With the invention of mechanized steel roller mills and automated sifting devices in the latter part of the 19th century, the nutritional characteristics of milled grain changed significantly because the germ and bran were removed in the milling process, leaving flour composed mainly of endosperm of uniformly small particulate size. Accordingly, the widespread consumption of highly refined grain flours of uniformly small particulate size represents a recent secular phenomenon dating to the past 150–200 y.

Refined sugars

The per capita consumption of all refined sugars in the United States in 2000 was 69.1 kg, whereas in 1970 it was 55.5 kg. This secular trend for increased sugar consumption in the United States in the past 30 y reflects a much larger worldwide trend that has occurred in Western nations since the beginning of the Industrial Revolution some 200 y ago. The per capita refined sucrose consumption in England steadily rose from 6.8 kg in 1815 to 54.5 kg in 1970. Similar trends in refined sucrose consumption have been reported during the Industrial Era for the Netherlands, Sweden, Norway, Denmark, and the United States.
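The size of these secular trends is easier to compare once the quoted figures are converted to relative changes. The following is a minimal sketch using only the per capita values given above; the function names are illustrative and not part of the source.

```python
def percent_increase(start: float, end: float) -> float:
    """Relative change from start to end, expressed in percent."""
    return (end - start) / start * 100.0


def fold_change(start: float, end: float) -> float:
    """Ratio of the end value to the start value."""
    return end / start


# Per capita figures (kg per year) as quoted in the text.
us_refined_sugars = percent_increase(55.5, 69.1)   # United States, 1970 to 2000
england_sucrose = fold_change(6.8, 54.5)           # England, 1815 to 1970

print(f"US refined sugars, 1970 to 2000: +{us_refined_sugars:.1f}%")      # +24.5%
print(f"England refined sucrose, 1815 to 1970: {england_sucrose:.1f}-fold")  # 8.0-fold
```

On these numbers, US intake rose by roughly one quarter in 30 y, whereas English sucrose intake rose about 8-fold over 155 y.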

The first evidence of crystalline sucrose production appears about 500 BC in northern India. Before this time, honey would have represented one of the few concentrated sugars to which hominins would have had access. Although honey likely was a favored food by all hominin species, seasonal availability would have restricted regular access. Studies of contemporary hunter-gatherers show that gathered honey represented a relatively minor dietary component over the course of a year, despite high intakes in some groups during short periods of availability. In the Anbarra Aborigines of northern Australia, average honey consumption over four 1-mo periods, chosen to be representative of the various seasons, was 2 kg per person per year. In the Ache Indians of Paraguay, honey represented 3.0% of the average total daily energy intake over 1580 consumer days. Consequently, current population-wide intakes of refined sugars in Westernized societies represent quantities with no precedent during hominin evolution.

In the past 30 y, qualitative features of refined sugar consumption have changed concurrently with the quantitative changes. With the advent of chromatographic fructose enrichment technology in the late 1970s, it became economically feasible to manufacture high-fructose corn syrup (HFCS) in mass quantity. The rapid and striking increase in HFCS use that has occurred in the US food supply since its introduction in the 1970s is indicated in Figure 3. HFCS is available in 2 main forms, HFCS 42 and HFCS 55, both of which are liquid mixtures of fructose and glucose (HFCS 42: 42% fructose and 53% glucose; HFCS 55: 55% fructose and 42% glucose).

HFCS consumption increased over the same period in which sucrose consumption declined. On digestion, sucrose is hydrolyzed in the gut into its 2 equal molecular moieties of glucose and fructose. Consequently, the total per capita fructose consumption (fructose from HFCS and fructose from the digestion of sucrose) increased from 23.1 kg in 1970 to 28.9 kg in 2000. As was the case with sucrose, current Western dietary intakes of fructose could not have occurred on a population-wide basis before industrialization and the introduction of the food-processing industry.
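The fructose accounting described here (fructose from HFCS plus fructose from the digestion of sucrose) can be sketched as a weighted sum. The HFCS fractions are those quoted in the text; the sucrose fraction of 0.50 follows the "2 equal moieties" simplification and ignores the water taken up during hydrolysis. The intake values in the example are hypothetical placeholders chosen only to show the arithmetic; they are not data from the source.

```python
# Fructose mass fraction of each sweetener.
# HFCS values are those quoted in the text; the sucrose value is the
# "two equal moieties" simplification (water of hydrolysis ignored).
FRUCTOSE_FRACTION = {"sucrose": 0.50, "hfcs42": 0.42, "hfcs55": 0.55}


def total_fructose_kg(intake_kg: dict) -> float:
    """Sum the fructose contributed by each sweetener (kg per person per year)."""
    return sum(FRUCTOSE_FRACTION[name] * kg for name, kg in intake_kg.items())


# HYPOTHETICAL per capita intakes in kg/y, for illustration only.
example_intake = {"sucrose": 30.0, "hfcs42": 10.0, "hfcs55": 10.0}

print(round(total_fructose_kg(example_intake), 2))  # 24.7
```

Because sucrose and both HFCS forms are each roughly half fructose, replacing sucrose with HFCS changes total fructose intake far less than an increase in total sweetener intake does.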

Refined vegetable oils

In the United States, during the 90-y period from 1909 to 1999, a striking increase in the use of vegetable oils occurred. Specifically, per capita consumption of salad and cooking oils increased 130%, shortening consumption increased 136%, and margarine consumption increased 410%. These trends occurred elsewhere in the world and were made possible by the industrialization and mechanization of the oil-seed industry. To produce vegetable oils from oil-bearing seeds, 3 procedures can be used: 1) rendering and pressing, 2) expeller pressing, and 3) solvent extraction. Oils made from walnuts, almonds, olives, sesame seeds, and flax seeds likely were first produced via the rendering and pressing process between 5000 and 6000 y ago. However, except for olive oil, most early use of oils seems to have been for nonfood purposes such as illumination, lubrication, and medicine.

The industrial advent of mechanically driven steel expellers and hexane extraction processes allowed for greater worldwide vegetable oil productivity, whereas new purification procedures permitted exploitation of nontraditionally consumed oils, such as cottonseed. New manufacturing procedures allowed vegetable oils to take on atypical structural characteristics. Margarine and shortening are produced by solidifying or partially solidifying vegetable oils via hydrogenation, a process first developed in 1897. The hydrogenation process produces novel trans fatty acid isomers (trans elaidic acid in particular) that rarely, if ever, are found in conventional human foodstuffs. Consequently, the large-scale addition of refined vegetable oils to the world’s food supply after the Industrial Revolution significantly altered both quantitative and qualitative aspects of fat intake.

Alcohol

In contrast with dairy products, cereal grains, refined sugars, and oils, alcohol consumption in the typical US diet represents a relatively minor contribution (1.4%) to the total energy consumed. The earliest evidence for wine drinking from domesticated vines comes from a pottery jar dated 7400–7100 y BP from the Zagros Mountains in northern Iran, whereas the earliest archaeologic indication of the brewing of beer and beer consumption dates to the late fourth millennium BC from the Godin site in southern Kurdistan in Iran. The incorporation of distilled alcoholic beverages into the human diet came much later. During the period from 800 to 1300 AD, various populations in Europe, the Near East, and China learned to distill alcoholic beverages.

The fermentation process that produces wine takes place naturally and, without doubt, must have occurred countless times before humans learned to control the process. As grapes reach their peak of ripeness in the fall, they may swell in size and burst, thereby allowing the sugars in the juice to be exposed to yeasts growing on the skins and to produce carbon dioxide and ethanol. Because of seasonal fluctuations in fruit availability and the limited liquid storage capacity of hunter-gatherers, it is likely that fermented fruit drinks, such as wine, would have made an insignificant or nonexistent contribution to total energy in hominin diets before the Neolithic.

Salt

The total quantity of salt included in the typical US diet amounts to 9.6 g/d. About 75% of the daily salt intake in Western populations is derived from salt added to processed foods by manufacturers; 15% comes from discretionary sources (ie, cooking and table salt use), and the remainder (10%) occurs naturally in basic foodstuffs. Hence, 90% of the salt in the typical US diet comes from manufactured salt that is added to the food supply.
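The breakdown above can be restated in grams per day. This is a minimal sketch that applies the quoted shares to the 9.6 g/d total; it assumes the Western-population shares apply unchanged to the US figure, as the text itself does, and the names used are illustrative.

```python
TOTAL_SALT_G_PER_DAY = 9.6  # typical US intake quoted in the text

# Share of daily salt intake by source, as quoted in the text.
SHARES = {
    "processed foods": 0.75,
    "discretionary": 0.15,
    "naturally occurring": 0.10,
}


def salt_by_source(total_g: float, shares: dict) -> dict:
    """Split a daily salt total (g/d) across sources according to their shares."""
    return {source: total_g * share for source, share in shares.items()}


grams = salt_by_source(TOTAL_SALT_G_PER_DAY, SHARES)
manufactured = grams["processed foods"] + grams["discretionary"]

for source, g in grams.items():
    print(f"{source}: {g:.2f} g/d")
print(f"manufactured salt: {manufactured:.2f} g/d")  # 8.64 g/d, ie, 90% of 9.6 g/d
```

On these figures, only about 1 g/d of the typical intake comes from salt naturally present in basic foodstuffs.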

The systematic mining, manufacture, and transportation of salt have their origin in the Neolithic Period. The earliest salt use is argued to have taken place on Lake Yuncheng in the Northern Province of Shanxi, China, by 6000 BC. In Europe the earliest evidence of salt exploitation comes from salt mines at Cardona, Spain, dating to 6200–5600 BP. It is likely that Paleolithic (the Old Stone Age, which began 2.6 million years ago and ended 10000–12000 y ago) or Holocene (10000 y ago to the present) hunter-gatherers living in coastal areas dipped food in seawater or used dried seawater salt in a manner similar to nearly all Polynesian societies at the time of European contact. However, the inland living Maori of New Zealand lost the salt habit, and the most recently studied inland hunter-gatherers add little or no salt to their food on a daily basis. Furthermore, there is no evidence that Paleolithic people undertook salt extraction or took interest in inland salt deposits. Collectively, this evidence suggests that the high salt consumption (about 10 g/d) in Western societies has minimal or no evolutionary precedent in hominin species before the Neolithic period.

Fatty domestic meats

Before the Neolithic period, all animal foods consumed by hominins were derived from wild animals. The absolute quantity of fat in wild mammals is dependent on the species' body mass; larger mammals generally maintain greater body fat percentages by weight than do smaller animals. Additionally, body fat percentages in wild mammals typically vary by age and sex and also seasonally in a cyclic waxing and waning manner with changing availability of food sources and the photoperiod. Hence, maximal or peak body fat percentages in wild mammals are maintained only for a few months during the course of a year, even for mammals residing at tropical and southern latitudes. In mammals, storage of excess food energy as fat occurs primarily as triacylglycerols in subcutaneous and abdominal fat depots.

The dominant (>50% of fat energy) fatty acids in the fat storage depots (adipocytes) of wild mammals are saturated fatty acids (SFAs), whereas the dominant fatty acids in muscle and all other organ tissues are polyunsaturated fatty acids (PUFAs) and monounsaturated fatty acids (MUFAs). Because subcutaneous and abdominal body fat stores are depleted during most of the year in wild animals, PUFAs and MUFAs ordinarily constitute most of the total carcass fat.

MUFAs and PUFAs are the dominant fats in the edible carcass of caribou for all 12 mo of the year. Because of the seasonal cyclic depletion of SFAs and enrichment of PUFAs and MUFAs, a year-round dietary intake of high amounts of SFAs would not have been possible for preagricultural hominins preying on wild mammals. Even with selective butchering by hominins, in which much of the lean muscle meat is discarded, MUFAs and PUFAs constitute the greatest percentage (>50% of energy as fat) of edible fatty acids in the carcass of wild mammals throughout most of the year.

Beginning with the advent of animal husbandry, it became feasible to prevent or attenuate the seasonal decline in body fat (and hence in SFAs) by provisioning domesticated animals with stored plant foods. Furthermore, it became possible to consistently slaughter the animal at peak body fat percentage. Neolithic advances in food-processing procedures allowed for the storage of concentrated sources of animal SFAs (cheese, butter, tallow, and salted fatty meats) for later consumption throughout the year.

Technologic developments of the early and mid 19th century (such as the steam engine, mechanical reaper, and railroads) allowed for increased grain harvests and efficient transport of both grain and cattle, which in turn spawned the practice of feeding grain (corn primarily) to cattle sequestered in feedlots. In the United States before 1850, virtually all cattle were free range or pasture fed and were typically slaughtered at 4–5 y of age. By about 1885, the science of rapidly fattening cattle in feedlots had advanced to the point that it was possible to produce, in 24 mo, a 545-kg steer that was ready for slaughter and exhibited "marbled meat". Wild animals and free-range or pasture-fed cattle rarely display this trait. Marbled meat results from excessive triacylglycerol accumulation in muscle interfascicular adipocytes. Such meat has a greatly increased SFA content, a lower proportion of n–3 fatty acids, and more n–6 fatty acids.

Modern feedlot operations involving as many as 100000 cattle emerged in the 1950s and have developed to the point that a characteristically obese (30% body fat) 545-kg steer can be brought to slaughter in 14 mo. Although 99% of all the beef consumed in the United States is now produced from grain-fed, feedlot cattle, virtually no beef was produced in this manner as recently as 200 y ago. Accordingly, cattle meat (muscle tissue) with a high absolute SFA content, low n–3 fatty acid content, and high n–6 fatty acid content represents a recent component of human diets.

SUMMARY

In the United States and most Western countries, diet-related chronic diseases represent the single largest cause of morbidity and mortality. These diseases are epidemic in contemporary Westernized populations and typically afflict 50–65% of the adult population, yet they are rare or nonexistent in hunter-gatherers and other less Westernized people. Although scientists and lay people alike may frequently identify a single dietary element as the cause of chronic disease (eg, saturated fat causes heart disease and salt causes high blood pressure), evidence gleaned over the past 3 decades now indicates that virtually all so-called diseases of civilization have multifactorial dietary elements that underlie their etiology, along with other environmental agents and genetic susceptibility.

Coronary heart disease, for instance, does not arise simply from excessive saturated fat in the diet but rather from a complex interaction of multiple nutritional factors directly linked to the excessive consumption of novel Neolithic and Industrial era foods (dairy products, cereals, refined cereals, refined sugars, refined vegetable oils, fatty meats, salt, and combinations of these foods). These foods, in turn, adversely influence proximate nutritional factors, which universally underlie or exacerbate virtually all chronic diseases of civilization: 1) glycemic load, 2) fatty acid composition, 3) macronutrient composition, 4) micronutrient density, 5) acid-base balance, 6) sodium-potassium ratio, and 7) fiber content. However, the ultimate factor underlying diseases of civilization is the collision of our ancient genome with the new conditions of life in affluent nations, including the nutritional qualities of recently introduced foods.
